The State of Confidential AI in 2025: Who Wins and Who Loses

A new generation of tools is emerging to address AI's most critical blind spot: the moment sensitive data is being used.

Every AI will soon handle sensitive data (or already does).

The real question is: can it do so without exposing it?

When an AI processes your information, like a health diagnosis or financial data, that information is more exposed than you think. Data can be encrypted while it's stored or being sent, but it becomes vulnerable when the AI uses it. To process your data, the system has to decrypt it, which leaves it readable in memory and an easy target for malware or hackers.

And if this is risky in traditional systems, it’s far worse on public blockchains.

In a decentralized infrastructure, most data is not only exposed while being used, but it’s replicated across thousands of nodes. Financial transactions, smart contract inputs, game logic, and identity data are globally visible by default.

This level of transparency breaks privacy.

Traders can be front-run.

Strategies can be copied.

Positions can be exploited in real time.

And for non-financial use cases like decentralized identity or credit scoring, full transparency makes compliance and confidentiality virtually impossible.

This is part of a larger movement toward programmable privacy, where technology is used to not just hide data, but to let different groups securely use and benefit from it without exposing the raw information. Archetype’s “Privacy 2.0” report highlights this with the concept of private shared state, where multiple parties can work on a sensitive dataset while keeping it confidential.

This is exactly what Confidential AI aims to do: securely close the "in-use" gap so sensitive AI workloads can run with verifiable security.

This article explores how that gap is being closed. We’ll break down the core technologies making Confidential AI possible, examine their trade-offs, and look at who’s already using them in production. Along the way, we’ll see why 2025 marks the moment when private, real-time AI at scale stopped being a theory and started becoming infrastructure.

Confidential AI: Closing the “In Use” Gap

Confidential AI addresses that exact weak point: the moment your data is most vulnerable, during inference (the stage where an AI model processes your input to generate a result, like a prediction or answer).

It keeps your data sealed, not just before and after, but while the model is actively running. That includes:

Inputs: medical scans, investment strategies, personal messages

Models: the proprietary LLMs trained by OpenAI, Anthropic, and Meta

Outputs: the answer, recommendation, or prediction the model produces

The core idea: run the model inside a sealed, tamper-proof environment so that nobody, not even the infrastructure provider, can inspect what’s going on inside.

Why Confidential AI Matters Even More Now

Enterprise spending on LLM projects has moved beyond the pilot stage. According to a June 2025 survey of 100 global CIOs, AI budgets have grown by approximately 75% year over year and are now treated as core IT expenses, no longer limited to experimental initiatives or short-term innovation funds.

Use‑cases driving the spend:

Instant paperwork: In hospitals, AI tools turn doctor–patient conversations into structured medical notes automatically, cutting hours of manual typing.

Front‑line chat & support: Companies use low-cost, open-source AI to handle common questions and save expensive premium AI models for tricky or unusual cases. This mix has lowered customer service costs by 20–40%.

Faster creative work: Movie studios use AI video tools to finish visual effects in hours instead of weeks, speeding up production without replacing creative teams.

These use cases are driving AI deeper into core business workflows. But to unlock even more value, enterprises need to combine powerful models with private data securely. That’s where private AI data marketplaces come in.

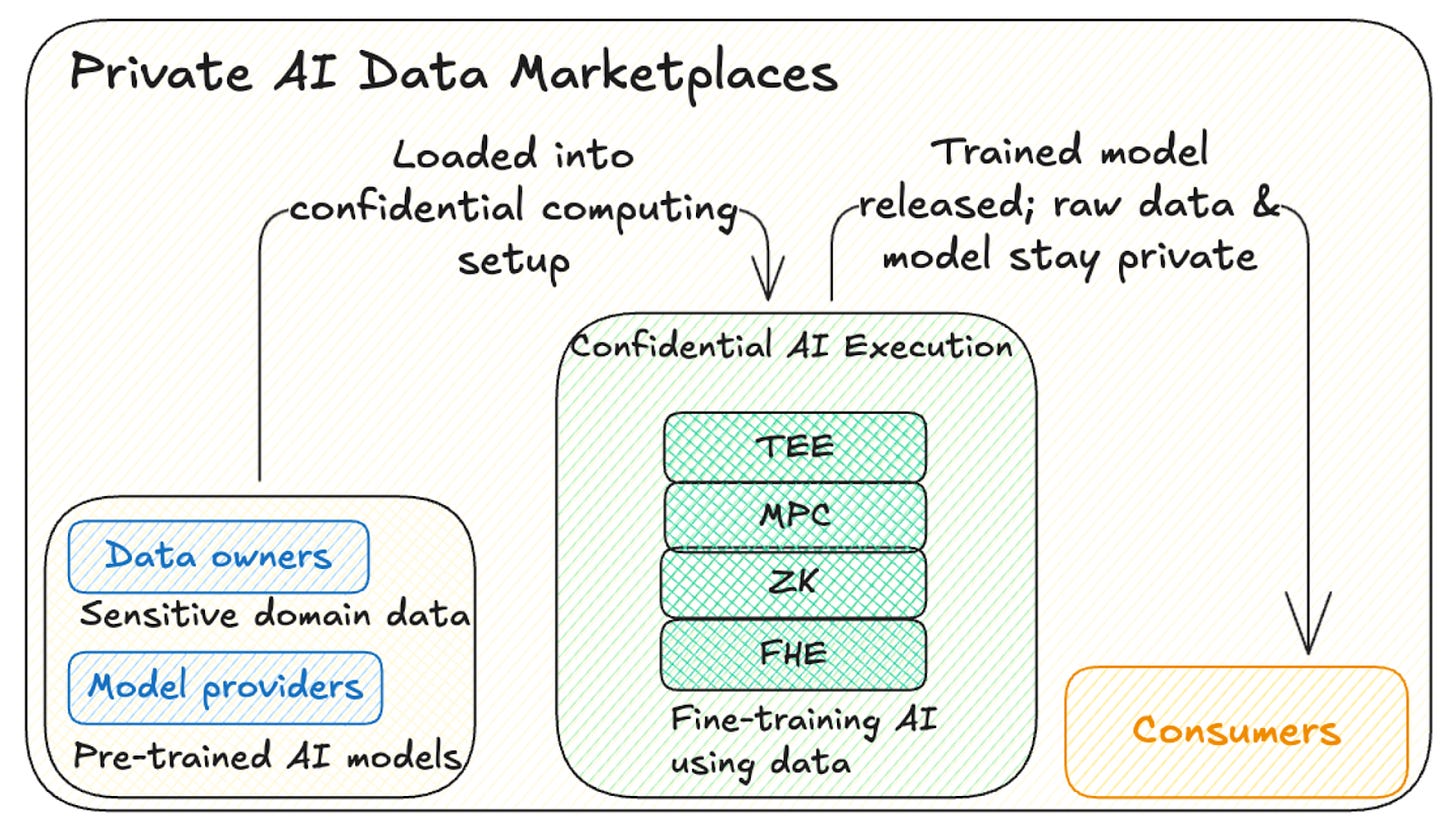

Private AI Data Marketplaces: Next Big Frontier

A model vendor (e.g., OpenAI) supplies a high-quality base model.

Independent data owners contribute specialized datasets like medical scans, satellite imagery, or customer conversations, to fine-tune the base model for their specific domain.

Why confidentiality is critical: Model parameters are the vendor’s crown jewels; raw datasets are the data owner’s competitive edge. Both must stay hidden while the fine-tuning runs, yet each side still needs cryptographic proof that the other behaved correctly.

What enables it: Confidential computing lets the base model and the private dataset meet inside a sealed enclave, perform the fine-tune, and export only the agreed-upon weights: no peeking, no leakage.

Without that guarantee, neither the model provider nor the data provider is willing to participate, and the marketplace stalls.

With it, entirely new data-for-model economies become viable, opening another major budget line in the 2025 AI spend surge.

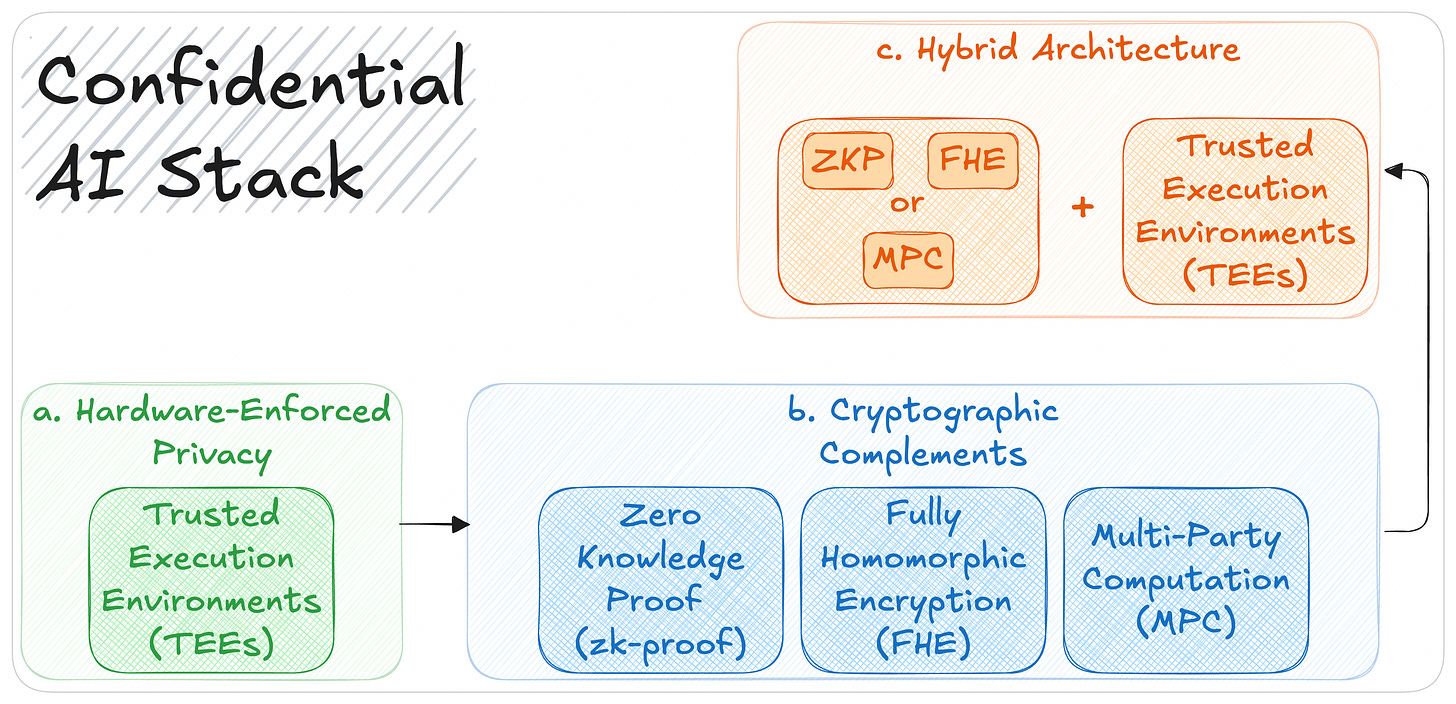

What Makes It Possible: The Confidential AI Stack

Confidential AI is made possible by a combination of hardware-based isolation and advanced cryptographic techniques. These are delivered by four key components, which we’ll walk through in the following order:

1. TEE (Hardware-enforced privacy)

A TEE (trusted execution environment) is a secure, isolated area inside a CPU or GPU where data can be processed privately. Think of it as a sealed room inside the chip. Once code runs there, it can’t be tampered with, and no one (not even the operating system or cloud provider) can see what’s inside.

For AI, this means inputs (like medical records), model weights (like proprietary LLMs), and outputs (predictions or scores) stay protected during the most vulnerable stage when the model is actively running.

By 2025, TEEs are built into most major processors: Intel SGX/TDX, AMD SEV-SNP, and Arm TrustZone.

The biggest leap for AI comes from NVIDIA’s H100 and Blackwell GPUs, which add confidential computing directly to high-performance inference, making it possible to run large models securely on shared hardware.

Performance tests show only a small slowdown:

Throughput drops by about 6–9%, and the time to generate the first token (the initial response) increases by roughly 20%.

After that first token, generation speed is almost the same as running without a TEE, and in some cases, like long prompts or batch processing, performance is nearly identical to non-TEE runs.
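To see what these figures mean for a single request, here is a rough back-of-the-envelope sketch. It assumes a deliberately simple latency model (time to first token plus tokens divided by generation rate) and applies the overheads quoted above to both terms, which is a pessimistic simplification. The baseline numbers (0.5 s to first token, 50 tokens per second, a 500-token answer) are hypothetical and chosen only for illustration.

```python
def tee_latency_estimate(ttft_s, tokens_per_s, n_tokens,
                         ttft_overhead=0.20, throughput_drop=0.075):
    """Estimate end-to-end latency without and with a TEE.

    ttft_overhead and throughput_drop default to the figures quoted in the
    text (roughly +20% time to first token, 6-9% lower throughput; 7.5% is
    the midpoint). The latency model itself is an assumption.
    """
    baseline = ttft_s + n_tokens / tokens_per_s
    tee_rate = tokens_per_s * (1 - throughput_drop)
    with_tee = ttft_s * (1 + ttft_overhead) + n_tokens / tee_rate
    return baseline, with_tee


# Hypothetical workload: 0.5 s to first token, 50 tokens/s, 500-token answer.
baseline, with_tee = tee_latency_estimate(0.5, 50.0, 500)
relative_overhead = (with_tee - baseline) / baseline
print(f"baseline {baseline:.2f}s, TEE {with_tee:.2f}s, overhead {relative_overhead:.1%}")
```

Under these assumptions the total request takes under one second longer, which is why the slowdown is usually acceptable for interactive use.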

The main trade-off is that you still need to trust the hardware vendor and keep the rest of the system secure.

Bottom line: If you need real-time AI on sensitive data today, start with TEEs.

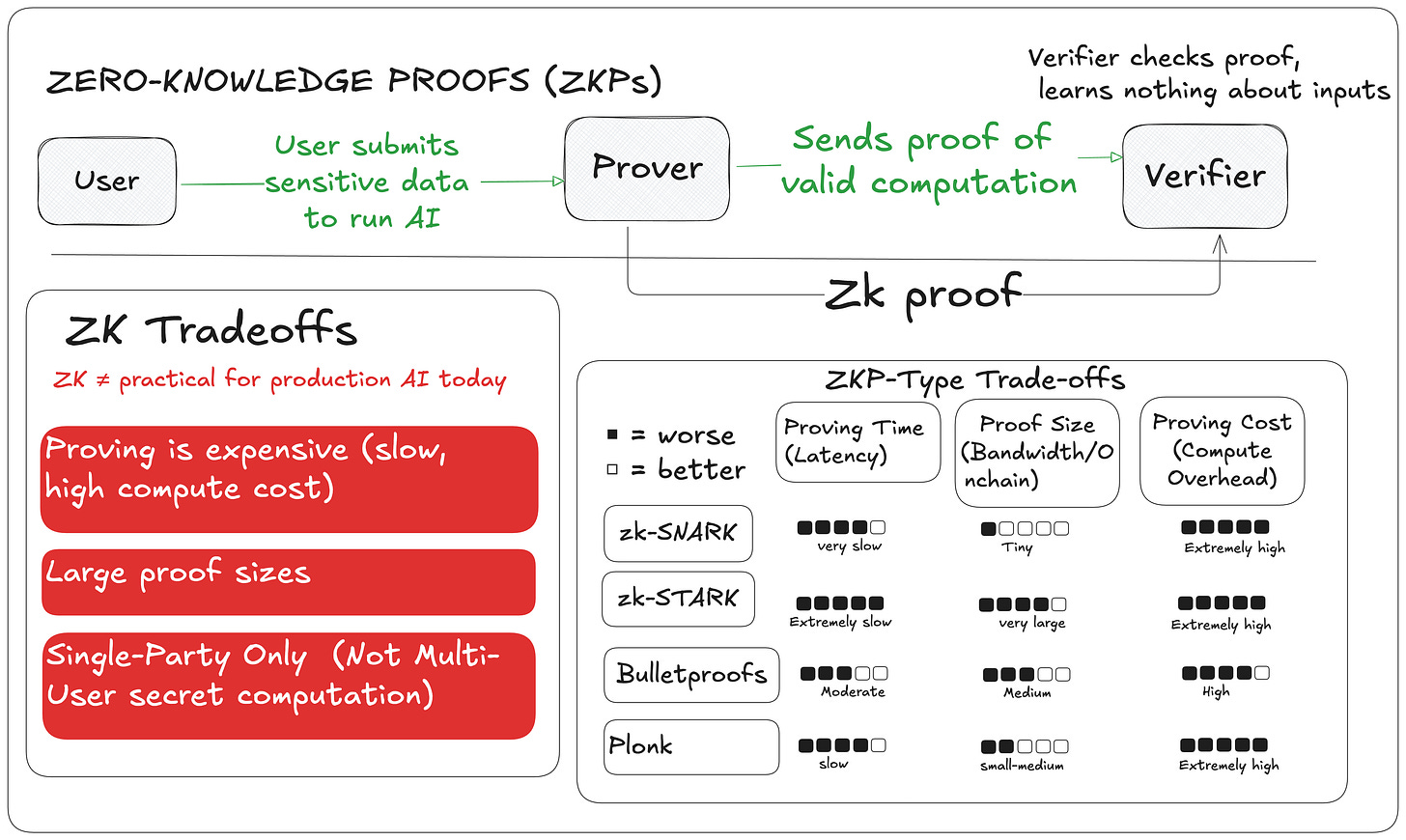

2. Zero-Knowledge Proofs (ZKPs)

ZKPs let a prover show a computation was correct without revealing the data, model, or code in the proof itself. In AI (“ZK-ML”), this means proving a model gave the right answer without exposing inputs or architecture.

Limitations:

Proof generation for deep models is slow (hours or days), resource-intensive, and produces large proofs, so it is not practical for real-time inference.

And importantly, ZK only hides data in the proof; during proof generation (i.e., during inference), the server still sees all inputs and model details.

This mismatch is why there’s a growing trend to run ZK generation inside a TEE, combining ZK’s verifiability with TEE’s runtime privacy.
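The prover/verifier split described above can be illustrated with a toy example. The sketch below is not ZK-ML: it is a classic Schnorr proof of knowledge made non-interactive with the Fiat-Shamir heuristic, swapped in because it fits in a few lines. The group parameters (`P = 23`, `Q = 11`, `G = 2`) are tiny and insecure, and all function names are illustrative assumptions.

```python
import hashlib
import secrets

# Toy group: G has order Q modulo P. These sizes are far too small to be secure.
P, Q, G = 23, 11, 2


def challenge(*values):
    """Fiat-Shamir: derive the challenge by hashing the public transcript."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret_x):
    """Prover: holds secret_x in plaintext while building the proof."""
    y = pow(G, secret_x, P)          # public value
    k = secrets.randbelow(Q)         # one-time nonce
    t = pow(G, k, P)                 # commitment
    c = challenge(G, y, t)
    s = (k + c * secret_x) % Q       # response
    return y, (t, s)


def verify(y, proof):
    """Verifier: checks G^s == t * y^c (mod P) without ever seeing secret_x."""
    t, s = proof
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, proof = prove(secret_x=7)
print(verify(y, proof))
```

Note that `prove` works on the secret in the clear. The proof hides the secret from the verifier, not from the machine generating it, which is the gap that running proof generation inside a TEE is meant to close.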

3. Fully Homomorphic Encryption (FHE)

FHE lets you run computations on encrypted data so plaintext never appears.

It’s the strongest form of privacy but extremely slow: often tens to hundreds of times slower than normal execution, and in some cases even more, depending on the model and operations.

Ciphertexts are large, and non-linear AI functions (like ReLU or Softmax) require workarounds. Today, it’s best suited for secure aggregation, search, or batch inference, not for real-time AI.
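To give a feel for computing on ciphertexts, here is a minimal sketch using the Paillier cryptosystem. Paillier is only additively homomorphic, not fully homomorphic, so it stands in for the idea rather than for a real FHE scheme. The primes are toy-sized and insecure, the function names are illustrative, and the code assumes Python 3.9+ (for `math.lcm`).

```python
import math
import secrets


def keygen(p=293, q=433):
    """Toy Paillier keys. Real deployments use primes of 1024+ bits."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)             # valid because the generator is n + 1
    return (n, n + 1), (lam, mu)     # (public key, private key)


def encrypt(pub, m):
    n, g = pub
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, c):
    n, _ = pub
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def encrypted_dot(pub, enc_inputs, weights):
    """Weighted sum of encrypted inputs with plaintext non-negative integer weights.

    Multiplying ciphertexts adds the plaintexts; raising a ciphertext to a
    power multiplies its plaintext by that constant.
    """
    n, _ = pub
    n2 = n * n
    acc = encrypt(pub, 0)
    for c, w in zip(enc_inputs, weights):
        acc = (acc * pow(c, w, n2)) % n2
    return acc


pub, priv = keygen()
enc_inputs = [encrypt(pub, x) for x in (5, 7)]      # client encrypts its data
enc_result = encrypted_dot(pub, enc_inputs, [2, 3])  # server never sees 5 or 7
print(decrypt(pub, priv, enc_result))                # client decrypts 2*5 + 3*7
```

A linear layer like this is the easy case. Non-linear steps such as ReLU or Softmax cannot be expressed with these two operations alone, which is one reason real FHE schemes are so much heavier.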

4. Multi-Party Computation (MPC)

MPC lets several parties compute together without revealing their inputs to each other. It offers strong privacy but is communication-heavy, adding latency.

Best for small, simple computations with limited participants. Often used alongside TEEs or ZKPs to balance speed and privacy.
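A minimal sketch of the idea, assuming the simplest MPC building block (additive secret sharing) and honest participants: each party splits its input into random shares, and only the combined total is ever reconstructed. The modulus and function names are illustrative choices, and the whole exchange is simulated in one process.

```python
import secrets

MOD = 2**61 - 1  # all arithmetic is done modulo a fixed public value


def share(secret, n_parties):
    """Split a secret into n random shares that sum to it modulo MOD."""
    shares = [secrets.randbelow(MOD) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MOD)
    return shares


def secure_sum(inputs):
    """Simulate parties summing their inputs without revealing them.

    Party i sends its j-th share to party j. Each party adds up what it
    received and publishes only that partial sum.
    """
    n = len(inputs)
    all_shares = [share(x, n) for x in inputs]
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MOD for j in range(n)
    ]
    return sum(partial_sums) % MOD


# Three parties with private values; only the total is revealed.
print(secure_sum([120, 45, 300]))
```

Every input requires a share to be sent to every other party, which is where the communication overhead mentioned above comes from: message count grows quadratically with the number of participants.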

Hybrid Futures: Combining TEEs with ZK and Other Tools

Privacy tools work best in combination: fast, sealed execution from TEEs can be paired with cryptographic proofs or multi-party protocols to create systems that are both high-performance and verifiable.

For example, a TEE can serve an AI query in real time, then output a ZKP proving it ran the right code on the right data.

TEEs can also act as verifiable oracles by signing attestations of the code and hardware they ran, which can be posted on-chain for smart contracts to trust.
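The attestation flow can be sketched roughly as follows. This is a simplified model, not any vendor's actual attestation format: real TEEs sign reports with an asymmetric key rooted in the hardware, while this sketch substitutes an HMAC with a shared demo key so it can run on the standard library alone. `HARDWARE_KEY` and all function names are hypothetical.

```python
import hashlib
import hmac
import json

# Assumption: stand-in for a hardware-rooted signing key. Real TEEs use
# asymmetric signatures that anyone can verify against a vendor certificate.
HARDWARE_KEY = b"demo-key-not-real"


def measure(code: bytes) -> str:
    """Hash of the code loaded into the enclave (its 'measurement')."""
    return hashlib.sha256(code).hexdigest()


def attest(code: bytes, output: bytes):
    """Enclave side: bind the code measurement to the output it produced."""
    report = {
        "measurement": measure(code),
        "output_hash": hashlib.sha256(output).hexdigest(),
    }
    payload = json.dumps(report, sort_keys=True).encode()
    signature = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    return report, signature


def verify_attestation(report, signature, expected_measurement):
    """Verifier side: check the signature, then check the code is the expected one."""
    payload = json.dumps(report, sort_keys=True).encode()
    expected_sig = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    return (hmac.compare_digest(signature, expected_sig)
            and report["measurement"] == expected_measurement)


code = b"model-v1 inference binary"
report, signature = attest(code, b"prediction: approve")
print(verify_attestation(report, signature, measure(code)))
```

A smart contract consuming such a report would perform the same two checks: is the signature valid, and does the measurement match the code it agreed to trust.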

And with GPU TEEs like NVIDIA’s H100/H200, even model training can happen inside enclaves on private data, with ZK or MPC added for selective auditability.

These approaches are already being deployed in production. Across sectors, confidential computing is moving from research to real-world infrastructure, which brings us to the next question:

Who Is Using Confidential AI Globally?

Confidential computing is already running at scale, both in enterprise environments and decentralized ecosystems.

And in nearly every deployment, one foundational technology powers it all: TEEs.

Microsoft (Azure Confidential Computing): Runs TEE-backed VMs for sensitive workloads in healthcare, finance, and government. Used to securely train and deploy AI models on private data.

NVIDIA: Confidential compute is now built directly into its Hopper and Blackwell GPUs, making it possible to run large models securely with minimal performance loss.

Apple (Private Cloud Compute): A major industry milestone in confidential AI. PCC keeps user inputs and AI outputs encrypted even during processing, by combining a client-side TEE on the iPhone with a server-side TEE on Apple Silicon. Apple also enforces strict masking rules before data leaves the device, ensuring only the minimum necessary information is sent. The design has drawn wide attention, and other phone makers are now adopting similar architectures.

Amazon (AWS Nitro Enclaves): Offers isolated compute environments for secure operations, now being used to test confidential AI inference and data analytics.

Fireblocks: Combines TEEs with MPC to protect private keys and enable secure transaction signing for institutional digital assets.

Who Is Building Confidential AI in Web3?

Computation Networks:

Phala Network provides a decentralized GPU-TEE cloud, combining runtime privacy with ZK/MPC proofs, and was first to benchmark large-scale LLM inference in secure enclaves.

Mind Network adds an FHE-based confidential compute layer for AI agents, encrypted LLM inference, and its own MindChain blockchain (Mind Network is partnering with Phala Network).

iExec runs off-chain AI inside TEEs for privacy-preserving apps and DePIN coordination, with tools like iApp Generator for easy deployment.

Oasis offers Sapphire, a TEE-backed EVM, and ROFL for off-chain AI processing, powering projects like WT3, a trustless AI trading bot.

DeFi & Block Building:

Unichain builds blocks inside TEEs with encrypted mempools and priority ordering to block MEV.

Toki uses TEEs for cross-chain execution and confidential key exchange in bridges.

Custody & Key Management:

Clave secures wallets in GPU TEEs with passkey and biometric login for AI-driven finance.

Fireblocks blends TEEs and MPC to safeguard institutional assets and enable confidential AI-based transaction signing.

Emerging Players:

Super Protocol is creating a decentralized confidential AI compute marketplace.

PIN AI focuses on privacy-first AI inference with runtime protection.

Fhenix brings FHE to Ethereum L2 for confidential contracts and AI logic.

Privasea integrates Zama’s FHE tools for privacy-first AI/ML workflows.

BasedAI combines FHE with zero-knowledge techniques for private LLM inference.

Across all of these examples, TEEs are doing the heavy lifting. Their speed, hardware-enforced isolation, and broad hardware support across CPUs and GPUs make them uniquely capable of handling sensitive workloads in production.

Why 2025 Marks a Big Shift in Confidential AI

The adoption of confidential computing has reached a measurable turning point in 2025, driven by four core developments:

Market size: Confidential computing is now a $9–10 billion industry, growing at over 60% annually.

Venture funding: Over $1 billion has been invested in infrastructure for privacy-preserving AI.

Hardware availability: Processors from NVIDIA (H100, Blackwell), Intel (TDX), and AMD (SEV-SNP) now ship with built-in secure enclaves. These are already used to run models with 70B+ parameters in production, with less than 7% performance overhead.

Cloud support: All major providers (AWS, Azure, Google Cloud, Oracle, Alibaba, Tencent) offer confidential compute instances using TEEs.

TEEs are currently the only solution that can handle real-time AI workloads with the necessary confidentiality and speed. Other cryptographic methods like ZKPs, MPC, and FHE are simply too slow for general, low-latency tasks.

However, TEEs have limitations: they depend on hardware vendors and require extra tools for verification. As a result, many systems now use a hybrid approach, combining TEEs for speed with cryptographic tools for correctness.

While not the final solution, in 2025, TEEs are the most practical foundation for confidential AI. The industry is now working to combine them with cryptographic proofs to boost transparency and trust without losing performance.

Hazeflow is a blockchain research firm with experience in research, analytics, and the creation of technical, product, and educational materials.

We work with blockchain teams (especially complex-tech ones) who struggle to clearly and meaningfully explain their complex product.